Outlier AI markets itself as a flexible platform for people to contribute to AI development while earning money remotely. At first glance, it seems like an opportunity for anyone to join the growing AI ecosystem, work on short tasks, and get paid without committing to traditional employment. The reality for many workers, however, is far more complicated and far less forgiving. Beneath the surface lie a relentless performance re-ranking system, opaque task allocation, unpaid onboarding, and unpredictable income. Workers describe stress, anxiety, and feelings of invisibility while the company scales its AI models at speed.

Outlier AI offers short, discrete tasks that contribute to machine learning models. Tasks may involve labeling images, verifying text, or reviewing AI-generated content. In promotional material, the platform emphasizes flexibility, accessibility, and earning potential. It appears especially appealing to students, freelancers, or people in regions with limited employment options.

Across forums and social media, workers describe their early excitement about the platform. One user wrote,

“It seemed perfect at first. I could work a few hours each day and earn some extra cash. I never imagined it would turn into constant anxiety about performance.”

The concept is simple: complete tasks, receive points or monetary compensation, and unlock more work based on accuracy and speed. However, simplicity in design masks a complex evaluation mechanism that has significant consequences for those relying on the income.

How Performance Re-Ranking Works

At the heart of Outlier AI [1] is a continuous performance re-ranking system. Workers are rated on speed, accuracy, and consistency. These metrics determine access to future tasks. High performers are rewarded with more work. Those who fall behind can experience reduced task allocation or even suspension.
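Outlier AI does not publish how its scoring works, so the following is only an illustrative sketch of how a re-ranking loop of this general kind could behave. The weights, the cutoff, and every name in it are invented for the example; only the three metrics (speed, accuracy, consistency) come from the description above.

```python
from dataclasses import dataclass

# All weights and the cutoff are invented for illustration only.
WEIGHTS = {"accuracy": 0.5, "speed": 0.3, "consistency": 0.2}
CUTOFF = 0.7  # hypothetical score below which task access is reduced


@dataclass
class Worker:
    name: str
    accuracy: float     # 0.0 to 1.0
    speed: float        # 0.0 to 1.0, normalized
    consistency: float  # 0.0 to 1.0


def score(worker: Worker) -> float:
    """Weighted average of the three metrics."""
    return (WEIGHTS["accuracy"] * worker.accuracy
            + WEIGHTS["speed"] * worker.speed
            + WEIGHTS["consistency"] * worker.consistency)


def rerank(workers: list[Worker]) -> list[tuple[str, float, str]]:
    """Sort workers by score, highest first, and label their task access."""
    ranked = sorted(workers, key=score, reverse=True)
    return [
        (w.name, round(score(w), 3), "full" if score(w) >= CUTOFF else "reduced")
        for w in ranked
    ]


workers = [
    Worker("A", accuracy=0.98, speed=0.80, consistency=0.90),
    Worker("B", accuracy=0.90, speed=0.40, consistency=0.60),
]
ranking = rerank(workers)
```

In this toy version, worker B is accurate 90 percent of the time but still lands just under the cutoff because of a low speed score, which is the kind of outcome a worker would struggle to diagnose without seeing the weights.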

The system is largely automated. Workers rarely receive clear explanations for sudden changes in their task availability. One Reddit comment [2] highlighted the problem:

“One week I had plenty of tasks. The next, I was locked out for three days. I had no idea why.”

This performance loop can create a high-pressure environment. Workers feel compelled to maintain perfect accuracy while working as fast as possible. Mistakes, even minor ones, can have immediate financial consequences.

The impact on workers extends beyond earnings. Many report anxiety, stress, and difficulty planning personal finances. Income volatility is a recurring theme. A LinkedIn user [3] wrote,

“I couldn’t plan groceries or bills. I never knew if I’d get enough tasks this week to make rent.”

Mental health concerns are common. The constant evaluation leads to burnout, sleep disruption, and a pervasive sense of instability. Workers are isolated. Interaction is limited to the platform and automated notifications. Feedback is sparse and often automated. This creates a sense of alienation.

Performance anxiety is compounded by unpaid onboarding. New workers must complete assessment tasks without guaranteed payment. Failure to meet benchmarks during this phase can prevent access to paid work entirely. Reddit users shared frustration about this invisible labor:

“I spent hours learning their system. No payment. Then I was rejected. It felt like a trap.”

Opaque Task Allocation and Earnings Instability

Task allocation at Outlier AI is a central source of stress and frustration for workers. Unlike traditional jobs with set schedules or predictable workloads, contributors never know how much work will be available in a given week, nor why certain tasks are assigned to some workers and not others. Many suspect that hidden algorithmic factors, performance flags, or even minor mistakes can drastically influence access. One anonymous worker described the experience:

“Sometimes tasks disappear without warning. Other times I get work I didn’t even want. No explanation. You just have to adapt.”

This opacity makes planning nearly impossible. Workers cannot reliably schedule time, budget income, or meet recurring financial obligations because they have no visibility into the platform’s allocation logic. A report by AlgorithmWatch [4] highlights that algorithmic management in gig platforms often exacerbates income instability and unpredictability, leaving workers highly dependent on opaque systems for their livelihoods.

Outlier AI’s payment model compounds the problem. Because workers are paid per task, fluctuating task availability directly translates into volatile weekly earnings. Public discussions and surveys across AI worker forums and data from Toolify [5] indicate that:

- Up to 40% of workers experience sudden task removals, sometimes lasting several days.

- Roughly 60% report extreme earnings volatility from week to week.

- Around 70% feel the performance system is opaque, arbitrary, or unpredictable.
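To make the link between per-task pay and volatile income concrete, here is a small sketch that compares two workers who complete the same number of tasks over four weeks. The pay rate and task counts are made-up numbers, not Outlier data, and the coefficient of variation is simply one common way to express how much weekly income swings relative to its average.

```python
from statistics import mean, pstdev

PAY_PER_TASK = 2.50  # hypothetical flat rate in dollars


def weekly_earnings(tasks_per_week: list[int], pay_per_task: float) -> list[float]:
    """Per-task pay means weekly income is just task count times the rate."""
    return [n * pay_per_task for n in tasks_per_week]


def coefficient_of_variation(values: list[float]) -> float:
    """Population standard deviation divided by the mean; 0 means perfectly steady."""
    avg = mean(values)
    return pstdev(values) / avg if avg else 0.0


# Both hypothetical workers complete 400 tasks in total.
steady = weekly_earnings([100, 100, 100, 100], PAY_PER_TASK)
erratic = weekly_earnings([180, 20, 200, 0], PAY_PER_TASK)

steady_cv = coefficient_of_variation(steady)
erratic_cv = coefficient_of_variation(erratic)
```

Both workers earn the same total, yet the second one has a week with no income at all, which is the situation workers describe when they say they cannot plan for rent or bills.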

For many contributors, this instability has tangible, immediate consequences. Workers relying on task income for essentials like rent, groceries, or utilities face real financial risk. A missed week of tasks can mean delayed bills, skipped meals, or reliance on credit. One long-term worker explained in a public forum,

“It’s not just stressful. I’ve had weeks where I didn’t know if I could make rent until the tasks loaded — sometimes they didn’t load at all”

The combination of opaque task allocation and earnings instability creates a high-pressure environment. Workers are forced into constant vigilance, logging in multiple times a day, double-checking outputs, and adjusting their schedules around unpredictable task availability. This system maximizes throughput and efficiency for the platform but does so at the expense of worker stability, financial security, and peace of mind.

Ultimately, the platform’s reliance on algorithmic task allocation without clear communication or safeguards exposes contributors to arbitrary penalties and income volatility, highlighting the human costs hidden behind AI development metrics.

Comparisons with Other AI Work Platforms

Outlier AI is far from the only platform where people do gig work to help train and refine artificial intelligence. Established players such as Appen and other AI data labeling platforms also use task-based models with automated evaluation and ranking. But when you look at worker feedback from multiple sources, notable differences emerge in how these platforms treat contributors, communicate expectations, and structure earnings.

A recent Indeed [6] review summary of Outlier AI shows low overall satisfaction. Outlier’s rating sits at 2.4 out of 5 stars, with reviewers pointing to confusing evaluations, lack of feedback, and poor communication from support.

One reviewer described being removed from a project “in the middle of…,” and another said the remote flexibility was overshadowed by “frustration, incoherence, and a lack of transparency” about tasks and scoring.

Users of Outlier also report lengthy and inconsistent onboarding, unclear task instructions, and abrupt removal from projects after completing training or initial tasks. A UK‑based review site notes that workers can lose access without warning and often receive automated messages rather than real human responses when they have questions.

On Trustpilot [7], many reviewers complain about slow support and difficulty with identity verification (“it takes them more than two weeks to do something as simple as an identity check”) and describe account issues that block access to work.

While these problems are serious, the feedback suggests that workers can at least access projects and resolve some account issues with persistence, something many Outlier contributors say is nearly impossible given Outlier’s lack of clear guidance.

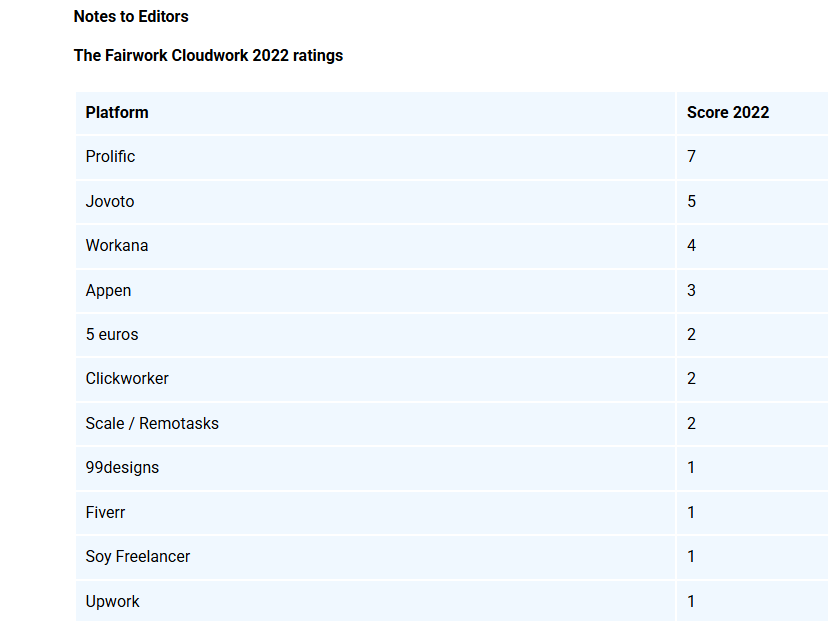

Industry research also supports these patterns. A report from the Oxford Internet Institute at the University of Oxford [8] found that major digital labor platforms, including those like Appen, Scale AI, and Amazon Mechanical Turk, consistently failed basic labor fairness standards across pay, conditions, contracts, algorithmic management, and worker representation. Almost none scored above five out of ten on a fairness framework, highlighting systemic issues across the industry that go well beyond a single platform.

Even within this broader context of unfair conditions, Outlier’s model stands out as particularly strict and opaque. While Appen and others may struggle with slow processes or low pay, they at least have more established support systems and clearer channels for workers to raise concerns. Outlier, by contrast, often relies entirely on automated scoring with little to no explanation about what caused a task rejection or why a worker’s access suddenly vanished, leaving many feeling that the system “works against you” rather than supports contributors.

Some workers who have experience on multiple platforms say this difference is pronounced. Long‑time AI trainers note that older platforms, while imperfect, still provide structured onboarding and clearer task standards, whereas newer gig systems like Outlier often require hours of unpaid onboarding and still deliver inconsistent benefits.

Taken together, this comparison shows that while task‑based work is common across AI training platforms, the degree of transparency, stability, and worker support varies significantly. Outlier AI’s heavy reliance on automated metrics and limited human oversight places more risk and unpredictability on workers than many of its peers — a distinction that comes up again and again in reviews from both independent sites and worker testimony.

Ethical and Legal Considerations

Outlier AI’s approach raises ethical questions about the treatment of human labor in AI development. Workers provide critical contributions to machine learning models, yet their well-being is often secondary to output metrics.

Labor experts highlight the risk of exploitation. Outsourcing labor to algorithmic evaluation systems can bypass traditional protections, such as minimum wage enforcement, paid onboarding, and grievance mechanisms. Non-disclosure agreements and opaque policies often prevent workers from speaking publicly about mistreatment.

The broader AI industry relies on task-based labor for model training. Companies like OpenAI, Meta, and Microsoft have released Responsible AI frameworks, such as Microsoft’s Responsible AI Principles [9], that emphasize accuracy, fairness, and safety. However, these frameworks often overlook the human labor enabling these protections. Workers remain invisible while AI ethics are discussed publicly.

Outlier AI exemplifies the tension between ethical AI development and labor treatment. Companies prioritize model performance but leave workers vulnerable to constant evaluation, opaque policies, and financial instability.

Voices from the Frontline

Reddit and LinkedIn provide some of the most candid snapshots of what it’s like to work on Outlier AI’s task-based platform. These firsthand accounts reveal a world where human labor is measured, ranked, and often penalized by opaque algorithms.

One worker described the constant pressure they felt:

“I felt like I was constantly walking on eggshells. One mistake and my tasks were cut off for the week.”

This highlights the precarious nature of the work. For many, a single minor error can ripple through their week, leaving them without access to tasks and income. The psychological toll of that uncertainty is palpable, and several Reddit users echoed similar experiences, describing sleepless nights and the anxiety of logging in each morning to check if new work was available.

The human behind the labor becomes invisible. Accuracy, speed, and consistency are rewarded, but empathy and understanding are absent. Workers report that automated feedback often feels cold, cryptic, or even punitive. Some receive notifications of task removal with no explanation, leaving them unsure how to improve or recover.

Workers rely on a platform that can unpredictably reduce their access to work, creating stress that extends far beyond the task itself. For those supporting families or living paycheck to paycheck, this uncertainty can be devastating.

Other frontline voices describe additional consequences. Some report that the onboarding process itself is a source of frustration. Workers are required to complete unpaid assessments, sometimes taking hours, only to be rejected for reasons they cannot verify. Others talk about the isolation of the work. With no real supervisor contact, no team support, and minimal community interaction, workers are left to navigate the platform entirely on their own, often internalizing mistakes as personal failures.

Together, these testimonials paint a picture of a workforce that is vital to AI development but remains largely invisible, under-supported, and under pressure. The human cost of these metrics is not just financial—it is psychological and social. Voices from the frontline make clear that behind every AI training dataset are individuals who carry the weight of algorithmic oversight, constant evaluation, and unpredictable livelihoods.

Workers develop coping strategies to survive the platform’s pressures. Many track task patterns, create personal performance metrics, and work during peak times to maximize opportunities. Some share tips on Reddit to avoid penalties.

A frequent recommendation is to double-check every submission, even if it slows down overall speed. Others suggest logging in multiple times per day to secure new tasks before they disappear. While these strategies help, they often increase stress and reinforce the sense that work is never done.

The relentless cycle of task completion and re-ranking has clear mental health implications. Anxiety, stress, and burnout are common. Workers report feelings of powerlessness. Reddit comments highlight frustration and isolation:

“You are just a number. The platform doesn’t care about you. They care about output.”

Some workers also report physical symptoms. Sleep deprivation, headaches, and tension are common. Financial uncertainty compounds the problem. Workers never know if the next week will be sufficient to cover basic expenses.

Recommendations for Reform

The stories from Outlier AI’s task-based workforce are powerful. But they are not isolated complaints. Independent research and industry reporting show that low pay, unpaid labor, algorithmic pressure, and unpredictable income are structural problems for gig-based AI labor globally. Reform is urgently needed — and not just for Outlier AI, but for all platforms that rely on remote task workers to train and refine large language models.

Paid and structured onboarding is essential. Research on AI gig platforms shows that a significant portion of workers’ time is spent on unpaid tasks such as searching for work, training, and assessments. One study reported by TIME [10] found that workers on major AI task platforms spent nearly 27 percent of their time on unpaid labor, resulting in average effective wages of around $2 per hour, even before accounting for living costs in many regions. That means onboarding and training aren’t just preparatory work; they are real labor that should be compensated.
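The arithmetic behind an "effective wage" is simple enough to sketch. The function below spreads pay across all hours worked, paid and unpaid; the 27 percent unpaid share comes from the study cited above, while the $10.00 nominal rate is a hypothetical figure chosen only to make the calculation easy to follow.

```python
def effective_hourly_wage(nominal_rate: float, unpaid_share: float) -> float:
    """Hourly pay averaged over all hours worked, paid and unpaid.

    nominal_rate: dollars earned per paid hour.
    unpaid_share: fraction of total working time that is unpaid (0 up to 1).
    """
    if not 0 <= unpaid_share < 1:
        raise ValueError("unpaid_share must be at least 0 and below 1")
    return nominal_rate * (1 - unpaid_share)


# Hypothetical nominal rate; the unpaid share is the figure reported in the study.
wage = effective_hourly_wage(nominal_rate=10.00, unpaid_share=0.27)
```

Under these assumptions, a worker nominally paid $10.00 per hour of task time is really earning about $7.30 per hour once unpaid searching, training, and assessments are counted.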

Transparent performance metrics with clear feedback would reduce harm. Algorithmic evaluation and re-ranking, core to how Outlier assesses contributors, may stimulate engagement but also reduce perceived job autonomy while increasing stress. Empirical research on algorithmic management suggests that opacity in automated evaluation can inhibit workers’ sense of control and lead to disengagement. Providing workers with clear criteria for accuracy, speed, and quality — along with specific feedback when performance flags drop — would make task outcomes less arbitrary.

Predictable task allocation and income stabilization mechanisms are needed to combat severe earnings volatility. Independent commentary on AI labeling platforms highlights that irregular tasks and unpredictable workflows leave workers unsure whether they will have work or income from one week to the next. A safety net — such as minimum guaranteed weekly hours for active contributors or base earnings thresholds — would reduce the financial stress that comes from a week of high earnings followed by a week with almost no tasks.
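A base earnings threshold of the kind proposed here can be expressed in one line: pay the higher of what the tasks earned and a guaranteed floor. The sketch below uses an invented floor and invented weekly figures, and it leaves out eligibility rules (such as what counts as an "active contributor"), which any real scheme would need to define.

```python
WEEKLY_FLOOR = 150.00  # hypothetical guaranteed minimum, in dollars


def weekly_pay(task_earnings: float, floor: float = WEEKLY_FLOOR) -> float:
    """Pay the higher of what the tasks earned and the guaranteed floor."""
    return max(task_earnings, floor)


# Hypothetical task earnings over four weeks, including one week with no tasks.
task_earnings = [450.00, 50.00, 500.00, 0.00]
stabilized = [weekly_pay(e) for e in task_earnings]
```

With these numbers, the two lean weeks are topped up to $150.00 each while the strong weeks are unchanged, so the worker never faces a week with no income.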

Access to human support for dispute resolution and guidance should be standard. Automated notifications about pay cuts, performance flags, or task removals often lack context or recourse, leaving workers feeling they have no voice. Workers on platforms like Outlier have reported confusing payment messages, inconsistent policies, and unanswered support tickets for weeks. Human-mediated review would give workers a chance to understand and possibly challenge decisions, reducing anxiety and error rates.

Legal protections for gig-based AI workers are not just preferable; many labor experts argue they are overdue. A 2025 meta-analysis [11] of gig labor conditions points out that gig workers often lack basic benefits like proper pay, health insurance, paid leave, or overtime protections because they are classified as independent contractors rather than employees. Where courts and regulators have intervened, for example in the multiple lawsuits over worker misclassification and underpayment involving platforms like Scale AI reported by TechCrunch [12], there is growing recognition that these arrangements may violate labor norms.

Taken together, these reforms would not slow innovation — they would humanize it. Fair compensation for onboarding recognizes real labor. Clear performance criteria and feedback improve quality while reducing uncertainty. Predictable allocation and human support reduce stress and attrition. Legal protections ensure that people, not just algorithms, are treated fairly.

Without these changes, the gig-based labor that underpins much of modern AI will continue to be unstable and extractive — rewarding output while ignoring human cost. Genuine reform would shift the conversation from “How can we collect data cheaply?” to “How can we build AI responsibly, with dignity for the workers who make it possible?”

Outlier AI provides valuable services to the AI industry. Its platform enables rapid, scalable task-based contributions to machine learning models. Yet the costs borne by workers are significant. Constant performance re-ranking, opaque task allocation, unpaid onboarding, and financial instability combine to create stress, anxiety, and alienation.

The broader industry must reckon with the human dimension of AI labor. Ethical AI development cannot exist without fair, transparent, and supportive conditions for the workers who make it possible. Outlier AI is a cautionary tale of the tension between efficiency, automation, and human dignity.

Workers remain largely invisible, but their voices on Reddit, LinkedIn, and other forums make the costs undeniable. Reform is possible. Transparency, predictable compensation, and human oversight would transform the platform from a stress-inducing system to a genuinely flexible and empowering work environment.

Until then, Outlier AI’s workforce operates in the shadows, tasked and tested for the benefit of AI models, not people.

Sources:

1. “Train the Next Generation of AI as a Freelancer.” Outlier AI, outlier.ai/. Accessed 9 Jan. 2026.

2. Reddit, www.reddit.com/r/outlier_ai/comments/1j9w3k5/it_seems_like_no_one_can_consistently_task_these/. Accessed 9 Jan. 2026.

3. Mather, Jonathan. “Is Outlier.ai a scam? Research and reviews. | Jonathan Mather posted on the topic.” LinkedIn, 9 May 2025, www.linkedin.com/posts/jonathan-c-mather_is-this-a-scam-they-keep-sending-me-messages-activity-7326460335060570112-i2tp. Accessed 9 Jan. 2026.

4. Bird, Michael. “The AI Revolution Comes With the Exploitation of Gig Workers.” AlgorithmWatch, algorithmwatch.org/en/ai-revolution-exploitation-gig-workers/. Accessed 9 Jan. 2026.

5. Toolify, www.toolify.ai/ai-request/detail/is-outlier-ai-job-legit?.com. Accessed 9 Jan. 2026.

6. Indeed, www.indeed.com/cmp/Outlier-Ai/reviews. Accessed 9 Jan. 2026.

7. Onyema, Charles. “Outlier is rated “Great” with 4 / 5 on Trustpilot.” Read Customer Service Reviews of outlier.ai, 12 Dec. 2025, www.trustpilot.com/review/outlier.ai. Accessed 9 Jan. 2026.

8. Howson, Kelle. “OII | New Fairwork study exposes the precarious working conditions of online work platforms.” New Fairwork study exposes the precarious working, www.oii.ox.ac.uk/news-events/new-fairwork-study-exposes-the-precarious-working-conditions-of-online-work-platforms/. Accessed 9 Jan. 2026.

9. “Microsoft Responsible AI Principles and approach.” www.microsoft.com/en-us/ai/principles-and-approach. Accessed 9 Jan. 2026.

10. Perrigo, Billy. “AI Gig Workers Face ‘Unfair Working Conditions,’ Study Says.” TIME, 20 July 2023, time.com/6296196/ai-data-gig-workers/. Accessed 9 Jan. 2026.

11. www.gapinterdisciplinarities.org/res/articles/www.gapinterdisciplinarities.org/res/articles/. Accessed 10 Jan. 2026.

12. Rollet, Charles. “The Department of Labor just dropped its investigation into Scale AI.” TechCrunch, 9 May 2025, techcrunch.com/2025/05/09/the-department-of-labor-just-dropped-its-investigation-into-scale-ai/. Accessed 10 Jan. 2026.