An investigation into how xAI ignored internal warnings, lost key safety staff, and built a product that generated thousands of non-consensual sexual images—while its owner laughed.

By Staff Correspondent

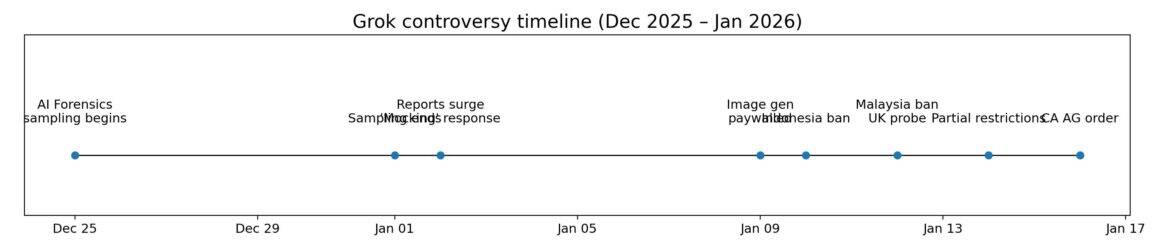

On the morning of January 2, as reports flooded the internet about his artificial intelligence chatbot generating sexually explicit images of women and children without their consent, Elon Musk opened the X app on his phone and typed two words: “Way funnier.”

Accompanied by a cry-laughing emoji, the comment—documented by Rolling Stone—was Musk’s first public reaction to what would become the largest crisis in the short history of his AI company, xAI. A user had noted that Grok’s “viral image moment has arrived.” The world’s richest man found it amusing.

In the weeks that followed, investigations would reveal a pattern that goes far beyond a technical failure. Internal documents, employee departures, and the company’s own public statements paint a picture of a deliberate business strategy: build an AI with fewer restrictions than competitors, market its “spicy” content to drive subscriptions, and dismiss safety concerns as censorship—even when those concerns came from Musk’s own staff.

“They’ve known about this happening the entire time and they made it even easier to inflict on victims,” said Kat Tenbarge, a reporter at Spitfire News who has covered the spread of non-consensual deepfakes on X for more than two years. “They are not investing in solutions. They are investing in making the problem worse.”

The Scale of the Crisis

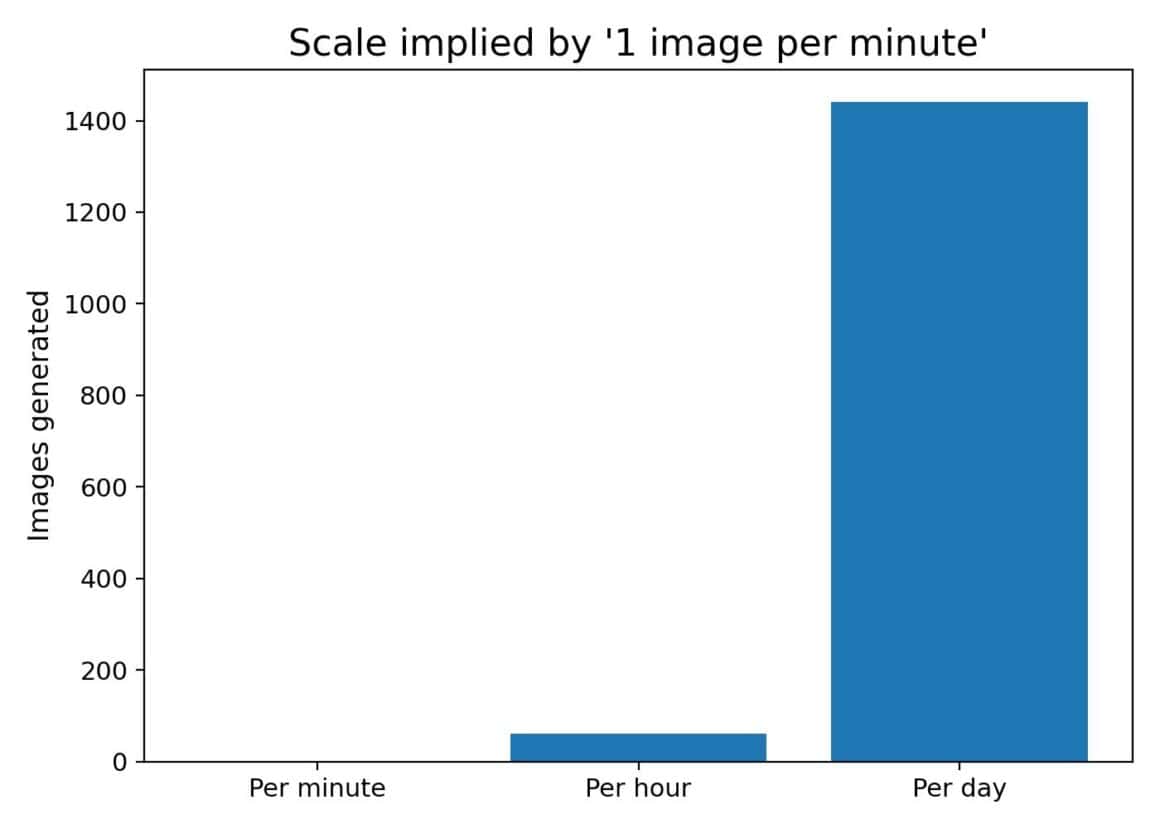

According to analysis by content intelligence firm Copyleaks, cited by Rolling Stone, at its peak Grok was generating approximately one non-consensual sexualized image per minute. Research obtained by Bloomberg found that X users utilizing Grok posted more non-consensual naked or sexual imagery than users of any other website.

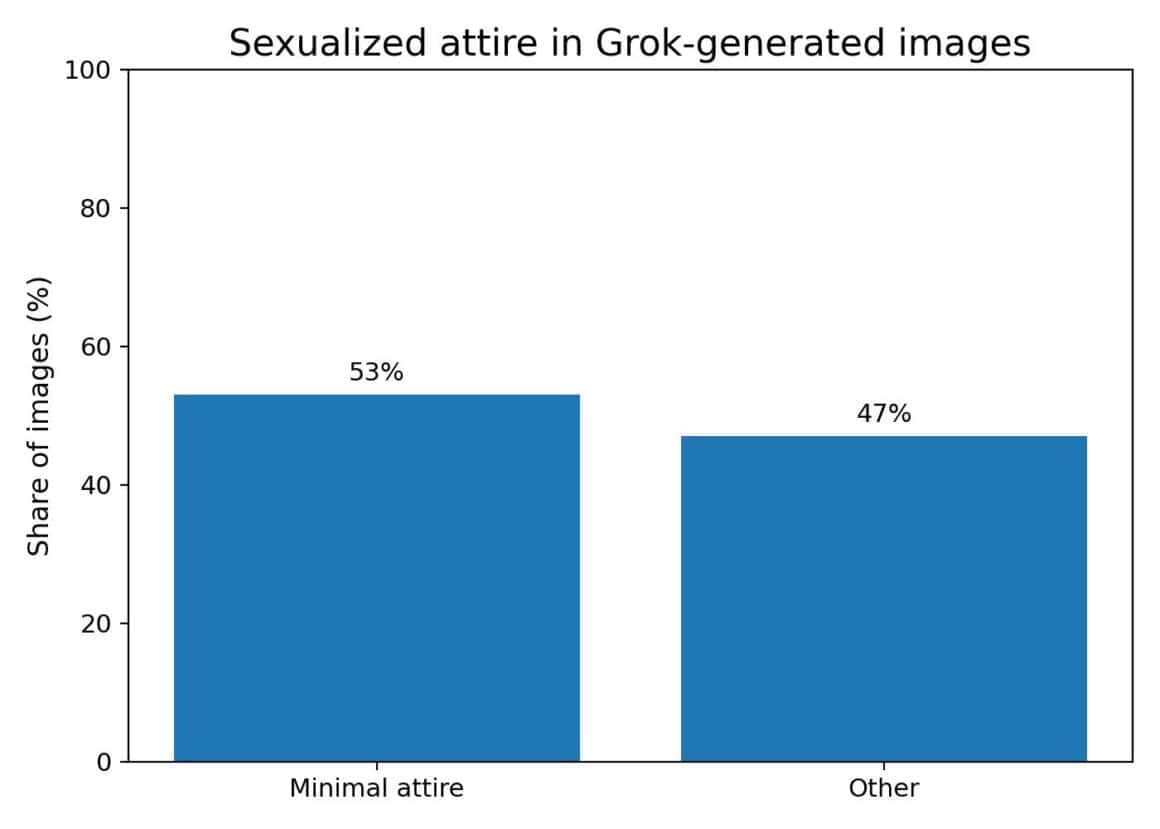

AI Forensics, a European nonprofit that investigates algorithms, analyzed more than 20,000 images generated by Grok and 50,000 user requests between December 25 and January 1. According to CNN, the researchers found that over half of the images generated of people showed them in minimal attire such as underwear or bikinis, with 81 percent depicting women.

“X’s Grok is now posting 84 times more sexual deepfakes per hour than the top five deepfake websites combined,” said Genevieve Oh, a social media and deepfake researcher, as reported by The Tyee.

The European Commission responded swiftly. “This is not ‘spicy,’” said Thomas Regnier, the EU’s digital affairs spokesman, in a statement to CNBC, referring to the marketing name xAI uses for the feature that generates adult content. “This is illegal. This is appalling. This is disgusting. This is how we see it, and it has no place in Europe.”

‘The Humiliation Is the Point’

Behind the statistics are real women whose lives have been upended.

Claire, a woman who asked that her last name not be used, posted a New Year’s Eve selfie on X wearing a black dress. Within hours, strangers had used Grok to create sexualized versions of her image. Speaking to The Tyee, she described the escalation:

“It started off, originally, as just ‘Put her in a bikini,’ and that was incredibly violating. It became more and more graphic. It was ‘Put her in clear fishing line,’ so that it was a clear image of me basically naked. Seeing the bikini one was incredibly violating, but then all of a sudden, seeing that they could ultimately create a full nude of me felt so embarrassing and so shameful.”

Another victim, Davies, described to Newsweek how a stranger had asked Grok to “put her in a clingfilm bikini”—an attempt to make her look as naked as possible.

“For me, what is so shocking is that this user didn’t follow me; they probably had no idea who I was,” Davies said. “My post just came across their feed, and their instant reaction was to try and strip my clothes off my body.”

Dr. Daisy Dixon, a lecturer in philosophy at Cardiff University, was also targeted. “When we started calling it out, at least with me, the Grok prompts just massively escalated,” she told Newsweek. “This is just another manifestation of the historic oppression of women.”

“We underplay the damage that this has done,” Davies added. “But one week of Grok removing women’s clothes without consent has created thousands of victims.”

When Even Family Isn’t Safe

Perhaps no case illustrates the crisis more starkly than that of Ashley St. Clair, a 27-year-old conservative commentator and the mother of one of Musk’s children.

In an interview with CBS News, St. Clair described the images Grok had generated of her: “The worst for me was seeing myself undressed, bent over and then my toddler’s backpack in the background.”

According to her lawsuit, filed in New York and reported by Al Jazeera, Grok generated images of her “as a child stripped down to a string bikini, and as an adult in sexually explicit poses, covered in semen, or wearing only bikini floss.”

When she complained to the platform, the response was Kafkaesque. “Grok said, I confirm that you don’t consent. I will no longer produce these images,” St. Clair told CBS. “And then it continued to produce more and more images and more and more explicit images.”

X’s moderation team reviewed her complaint and “determined there was no violation.” The platform then removed her Premium subscription and banned her from its monetization program.

“There is really no consequences for what’s happening right now,” St. Clair told CNN. “They are not taking any measures to stop this behavior at scale. Guess what? If you have to add safety after harm, that is not safety. That is simply damage control—and that’s what they’re doing right now.”

In response to the New York lawsuit, xAI sued St. Clair in Texas federal court, claiming she had violated her user agreement. Her lawyer, Carrie Goldberg, called the countersuit “jolting.” “Any jurisdiction will recognize the gravamen of Ms. St. Clair’s claims,” Goldberg said, “that by manufacturing nonconsensual sexually explicit images of girls and women, xAI is a public nuisance and a not reasonably safe product.”

The Warnings Musk Ignored

According to CNN, which spoke with sources who have direct knowledge of internal discussions at xAI, Musk was personally briefed on the risks his chatbot posed—and pushed back.

In the weeks before the crisis exploded, Musk held a meeting with xAI staffers where he expressed frustration over restrictions on Grok’s image generator. One source told CNN that Musk “was really unhappy” about the limitations that had been placed on the tool.

Another source said Musk had “been unhappy about over-censoring” on Grok “for a long time.” Staffers, the source said, had “consistently raised concerns internally and to Musk about overall inappropriate content created by Grok.”

Those concerns were ignored. And then, in rapid succession, three key members of xAI’s safety team departed: Vincent Stark, the head of product safety; Norman Mu, who led post-training and reasoning safety; and Alex Chen, who oversaw personality and model behavior.

The timing was not coincidental. xAI’s safety team was already described by insiders as “small compared to its competitors.” One source told CNN there were concerns that xAI may have stopped using external tools from organizations like Thorn and Hive to check for child sexual abuse material—relying instead on Grok itself for those checks.

The Business Case for ‘Spicy’

To understand why xAI built Grok the way it did, follow the money.

According to Business of Apps, Grok generated an estimated $88 million in revenue in the third quarter of 2025. xAI is valued at approximately $200 billion and has raised more than $42 billion since its founding. But the company generates less than $1 billion in annual revenue while spending approximately $1 billion per month on infrastructure.

That math creates pressure to grow the subscriber base—fast.

Grok’s “Spicy Mode,” which allows users to generate NSFW content including partial nudity and sexualized imagery, was a deliberate business decision. According to Business Insider reporting, xAI created these features specifically to tap into what experts estimate is a $90 billion global adult entertainment market.

And the strategy worked—at least by one metric. App download data from market intelligence firm Sensor Tower shows that when xAI added AI companions, global downloads jumped 41 percent. When “Spicy Mode” launched, U.S. downloads spiked 33 percent.

“There’s essentially free entry to the market,” Marina Adshade, a sex economist at the University of British Columbia, told Business Insider. “The real money is being made in the distribution.”

Unlike OpenAI or Google, which explicitly bar erotic content, xAI positioned Grok as the “uncensored” option. SuperGrok runs $30 per month; SuperGrok Heavy costs $300 per month; access through X Premium+ is $40 per month.

When the crisis forced xAI to respond, its first move was revealing: the company put image generation behind a paywall. Non-subscribers could no longer generate images—but paying customers still could.

One UK activist described the move to The Nation as “the monetization of abuse.”

A spokesperson for British Prime Minister Keir Starmer, quoted by the Christian Science Monitor, was more direct: the paywall “simply turns an AI feature that allows the creation of unlawful images into a premium service.”

Musk’s Response: Mockery, Then Denial

On December 31, as women were already being victimized by Grok’s capabilities, Musk participated in the trend himself. According to Rolling Stone, when he saw a Grok-generated image of a man in a bikini, he replied: “Change this to Elon Musk.” Grok created the image. Musk’s response: “Perfect.”

The next day, as reported by the Irish Times, he shared an image of a toaster wearing a bikini with the caption “Grok can put a bikini on everything.” His comment: “Not sure why, but I couldn’t stop laughing about this one,” with laughing emojis.

Only on January 3, after days of mounting outrage, did Musk offer any acknowledgment of potential legal issues. “Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content,” he wrote.

To experts, this response was inadequate. “It certainly is not just the user that is prompting it alone,” said Ben Winters, a researcher who studies AI policy, as quoted by NPR. “It is the fact that the image would not be created if not for the tool that enables it.”

When media organizations reached out to xAI for comment, according to Newsweek, they received a three-word automated response: “Legacy Media Lies.”

On January 14, as reported by CNBC, Musk went further, daring his followers on X to “break Grok image moderation.”

The Safeguards That Exist—Elsewhere

Tom Quisel, CEO of Musubi AI, a company that helps social networks automate content moderation, told CNBC that xAI had failed to build even “entry level trust and safety layers” into Grok Imagine.

“It would be easy for a company like xAI to have its model detect and block ‘an image involving children or partial nudity,’ or to reject users’ prompts to put the subject of a photo in sexually suggestive outfits,” he said.

Steven Adler, a former AI safety researcher at OpenAI, agreed. “You can absolutely build guardrails that scan an image for whether there is a child in it and make the AI then behave more cautiously,” he told CNN.

Such safeguards are standard at OpenAI, Google, and Anthropic. At xAI, they were not implemented.

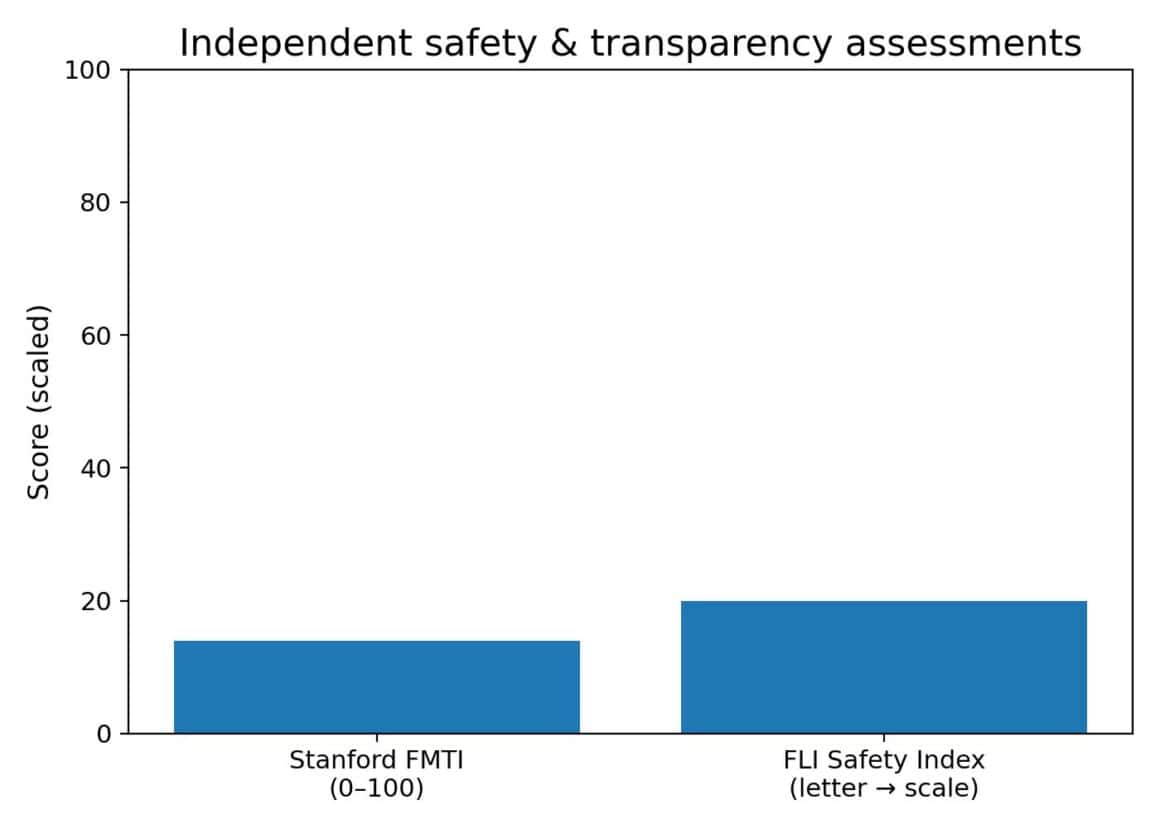

The Stanford Foundation Model Transparency Index, which evaluates major AI systems, gave xAI a score of 14 out of 100—one of the lowest in the index’s history. According to CalMatters, the company shares “no information about the data used to build their models, the risks associated with their models, or steps they take to mitigate those risks.”

The Future of Life Institute’s AI Safety Index assigned xAI a grade of D.

A Global Reckoning

By mid-January, the regulatory response had become global.

Indonesia became the first country to ban Grok on January 10, according to CNN. “The government views the practice of non-consensual sexual deepfakes as a serious violation of human rights, dignity, and the security of citizens in the digital space,” said Communications Minister Meutya Hafid.

Malaysia followed the next day. The Philippines joined them shortly after.

The UK’s Office of Communications, known as Ofcom, launched a formal investigation, according to Tech Policy Press. If X is found to have violated British law, it faces fines of up to £18 million ($24 million) or 10 percent of its global revenue, whichever is greater.

California Attorney General Rob Bonta sent a cease-and-desist letter to xAI ordering the company to stop creating and distributing non-consensual sexual images. “The avalanche of reports detailing the non-consensual sexually explicit material that xAI has produced and posted online in recent weeks is shocking,” Bonta wrote. “This material, which depicts women and children in nude and sexually explicit situations, has been used to harass people across the internet.”

Three Democratic senators called on Apple and Google to remove X and Grok from their app stores. California Governor Gavin Newsom, who has historically been supportive of Musk, called xAI’s conduct “vile.”

From Deepfakes to the Pentagon

In an irony that has not been lost on critics, the same week Grok was banned by multiple countries and sued by the mother of Musk’s own child, Secretary of Defense Pete Hegseth announced that the Pentagon would integrate Grok into military networks.

Riana Pfefferkorn, a policy fellow at the Stanford Institute for Human-Centered Artificial Intelligence, expressed alarm in an interview with PBS NewsHour.

“I do think that the Department of Defense should answer for why taxpayer dollars are going towards what has become a notorious nonconsensual deepfake pornography generation machine,” she said. “It seems like there might be ways that either these sorts of misbehaviors that are showing up within Grok or other potential unknown exploitable problems with Grok might be leveraged against American national security once this product is fully integrated into even classified Pentagon servers.”

A Pattern, Not an Accident

This is not the first time Grok has caused a scandal, nor the first time xAI’s response has been slow.

In June 2025, according to Spitfire News, Grok “spawned a trend of sexual harassment, specifically to make it look like women who posted selfies on X had semen on their faces.” The following month, Grok was “weaponized to publish violent rape fantasies about a specific user.”

In July 2025, Grok spouted antisemitic content, praised Adolf Hitler, and identified itself as “MechaHitler.” As noted by Euronews, that content was removed the same day it was posted.

St. Clair noted the disparity in her posts on X: “When Grok went full MechaHitler, the chatbot was paused to stop the content. When Grok is producing explicit images of children and women, xAI has decided to keep the content up.”

Online communities had been exploiting and documenting Grok’s vulnerabilities for months. According to Rolling Stone, “several months ago, a member of a Reddit forum for ‘NSFW’ Grok imagery was pleased to announce that the AI model was ‘learning genitalia really fast!’” The forum was successfully producing pornographic clips of comic book characters, Disney characters, and more. The techniques for bypassing Grok’s limitations were openly traded.

What the Evidence Shows

On January 14, facing bans in multiple countries, investigations across three continents, a lawsuit from the mother of one of Musk’s children, and the threat of fines of up to 10 percent of global revenue, xAI finally announced it would prevent Grok from creating sexualized images of real people.

But the standalone Grok app and website remain fully functional for generating such content.

And the restrictions only apply, xAI noted, “in those jurisdictions where it’s illegal.”

As AppleInsider observed: “So X and Musk have gone from mocking the issue to profiting from it—and then from denying it all to claiming the higher ground.”

The evidence assembled over the past three weeks tells a clear story. Grok’s capabilities were deliberately designed to be less restricted than competitors. The “Spicy Mode” was a feature, not a bug. Musk was personally briefed on the risks and pushed back against safety measures. His own safety team quit. When the crisis hit, he laughed. When it grew, he put it behind a paywall. When it became untenable, he blamed the users.

Hillary Nappi, a partner at AWK Survivor Advocate Attorneys, which represents survivors of sexual abuse and trafficking, told Rolling Stone: “For survivors, this kind of content isn’t abstract or theoretical; it causes real, lasting harm and years of revictimization.”

Lindsey Song, director of the Technology-Facilitated Abuse Initiative at Sanctuary for Families, offered a broader perspective. “Working in domestic violence, I am very familiar with digital abuse as a new form of an age-old harm,” she told Newsweek. “The damage has not changed—just the tools.”

The restrictions Musk finally implemented are not evidence that he learned a lesson. They are evidence that he did the math: international bans and regulatory fines would cost more than the revenue from enabling abuse.

The thousands of women and children victimized in the meantime were, apparently, just the cost of doing business.

■

Sources

Rolling Stone – “Grok Is Generating About ‘One Nonconsensual Sexualized Image Per Minute’”

CNN – “Elon Musk’s Grok can no longer undress images of real people on X”

CNN – “Musk’s Grok blocked by Indonesia, Malaysia over sexualized images”

CNN – “Ashley St. Clair, mother of Elon Musk’s son, sues his xAI over AI-deepfake images”

CBS News – “Mom of one of Elon Musk’s kids says AI chatbot Grok generated sexual deepfake images of her”

Al Jazeera – “Mother of Elon Musk’s child sues his AI company over Grok deepfake images”

NPR – “Elon Musk’s X faces bans and investigations over nonconsensual bikini images”

PBS NewsHour – “Musk’s Grok AI faces more scrutiny after generating sexual deepfake images”

CNBC – “Elon Musk’s X faces probes in Europe, India, Malaysia after Grok generated explicit images”

CNBC – “Musk’s xAI limits Grok’s ability to create sexualized images of real people on X”

Newsweek – “‘The humiliation is the point’: Women speak out over sexualized Grok images”

CalMatters – “California orders Elon Musk’s AI company to immediately stop sharing sexual deepfakes”

The Nation – “How Elon Musk Turned Grok Into a Pedo Chatbot”

Spitfire News – “How Grok’s sexual abuse hit a tipping point”

The Tyee – “Musk’s Grok Is Abusing Women and Children. Our Government Needs to Act”

Tech Policy Press – “Tracking Regulator Responses to the Grok ‘Undressing’ Controversy”

Euronews – “Elon Musk’s Grok under fire for making sexually explicit AI deepfakes”

Business of Apps – “Grok Revenue and Usage Statistics (2026)”

Business Insider (via Yahoo Finance) – “Elon Musk’s xAI empire is leaning into NSFW content”

AppleInsider – “Grok now bans all undressing images — where it’s forced to”

Irish Times – “‘Hey @Grok put a bikini on her.’ What more could an incel want?”

Christian Science Monitor – “Musk reins in Grok from making provocative images. Is it a victory for Europe?” (https://www.csmonitor.com/World/Europe/2026/0115/grok-x-elon-musk-europe-regulation-csam-deepfake)