12 Million Channels Terminated. AI Making Life-or-Death Decisions for Creators. And a Platform That Claims Everything Is Fine.

- The Numbers Don’t Lie (But They Don’t Tell the Whole Truth Either)

- “Human Here”: The Great Moderation Charade

- The SplashPlate Paradox: Punishing the Victim

- The Two-Tier System: Who Gets a Human, Who Gets the Bot

- Neal Mohan’s AI Optimism (And Why Creators Aren’t Buying It)

- What Happens Now?

- The Bottom Line

- Sources

Here is a number that should make every YouTube creator nervous: 12,460,248.

That is how many channels YouTube terminated between January and September 2025. Can you imagine twelve million channels? All gone. In nine months!

The platform wants you to believe these were all bad actors. Scammers from Southeast Asia. Spam accounts. Content thieves. And sure, some of them probably were. But buried in that mountain of terminations are legitimate creators who woke up one morning to find their life’s work erased. Their appeals were rejected in minutes. Their income streams were severed without warning or explanation.

I have spent weeks digging through this story, talking to affected creators, reading through transparency reports, forum posts, and angry Twitter threads. What I found is troubling. Not because YouTube is moderating content (they have to do that), but because of how they are doing it, and because of who gets protected versus who gets thrown to the algorithms.

The Numbers Don’t Lie (But They Don’t Tell the Whole Truth Either)

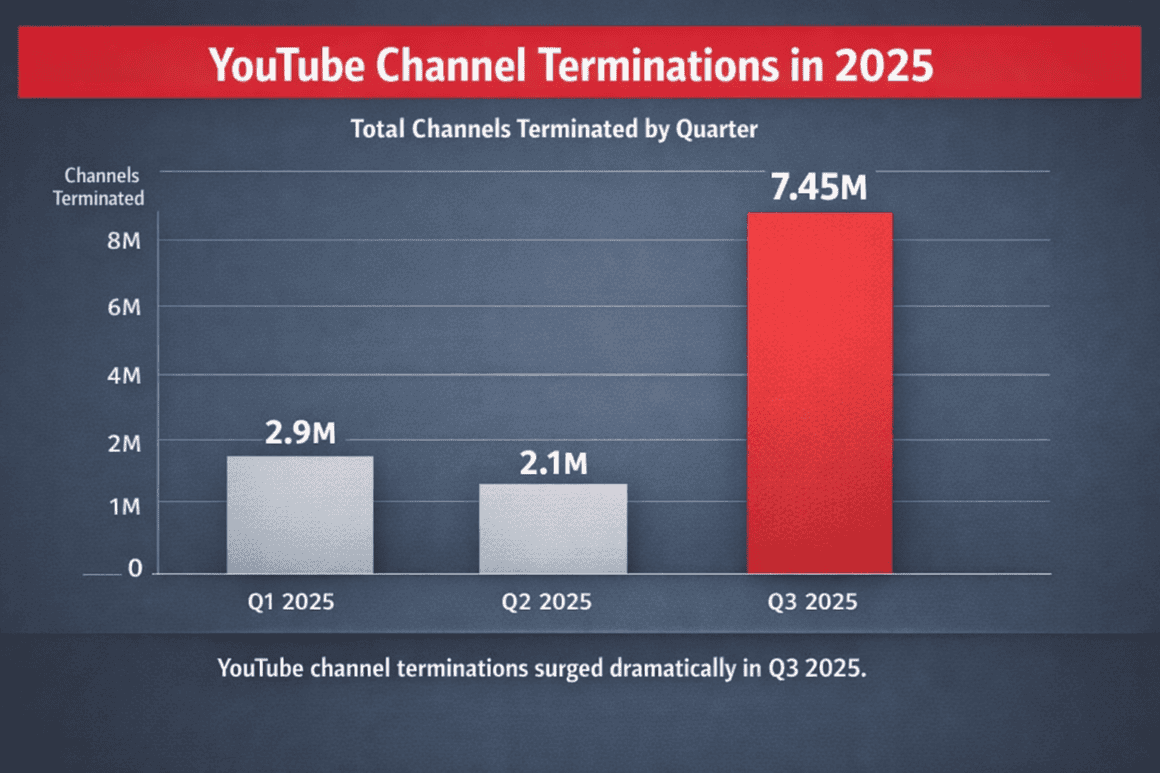

Let me break down what YouTube’s own transparency reports show. In Q1 2025, the platform removed 2,897,659 channels. Q2 saw 2,105,778 more go. Then Q3 hit like a freight train: 7,456,811 channels terminated in just three months.

In Q3 alone, that works out to roughly 81,000 channels every single day.
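The arithmetic behind those figures is easy to check. Here is a minimal sketch in Python that uses only the three quarterly counts quoted above. The variable names are mine, and the day count assumes Q3 means the standard calendar quarter, July 1 through September 30, 2025.

```python
from datetime import date

# Quarterly channel terminations as quoted from YouTube's transparency reports.
terminations = {"Q1": 2_897_659, "Q2": 2_105_778, "Q3": 7_456_811}

# Nine-month total across the three quarters.
total = sum(terminations.values())

# Number of days in Q3 2025 (assumed to be the calendar quarter).
q3_days = (date(2025, 10, 1) - date(2025, 7, 1)).days

# Average terminations per day during Q3.
q3_daily = terminations["Q3"] / q3_days

print(f"Total, Jan-Sep 2025: {total:,}")
print(f"Q3 daily average over {q3_days} days: {q3_daily:,.0f}")
```

The three quarters add up to the 12,460,248 figure, and the Q3 count spread over its 92 days comes to just over 81,000 channels a day.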

Now, YouTube will tell you (and they have) that this is actually down from previous periods. TeamYouTube responded on X to animator Nani Josh, who had been calling out these numbers: “You’re right that over 12 million channels have been terminated this year, but it varies. For example, 20.5M channels were terminated in Q4 2023 alone.”

And that is technically accurate. But it misses the point entirely.

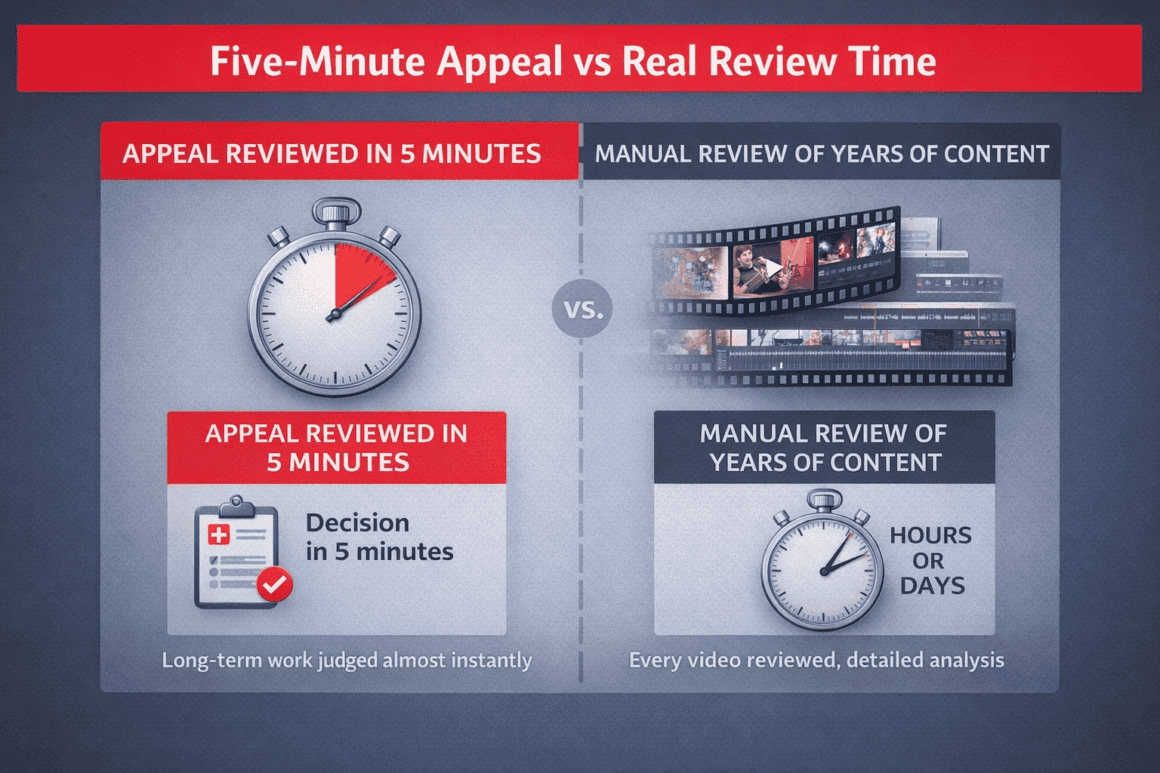

The issue is not the raw numbers. The issue is that somewhere in those millions of terminations are creators like Nani Josh himself. A guy who ran a 650,000-subscriber animation channel woke up one day to find it deleted for “spam and scam” content. His appeal? Rejected in five minutes.

Yes, in just five minutes.

Think about that. Nani Josh had years of original animation on that channel. Hand-drawn frames. Hours and hours of creative work. To properly review even a fraction of it would take hours. Days, maybe. But his appeal got processed in five minutes and came back rejected.

As Josh asked on X: “Are we really supposed to believe 12 million creators all violated policies? Did every one of them get a fair human review… or is YouTube’s AI just wiping out channels whenever it feels like it?”

I think we all know the answer.

“Human Here”: The Great Moderation Charade

YouTube has consistently claimed that appeals are manually reviewed by human staff. TeamYouTube stated on November 8, 2025, that “appeals are manually reviewed, so it can take time to get a response.”

But creators have been documenting something very different.

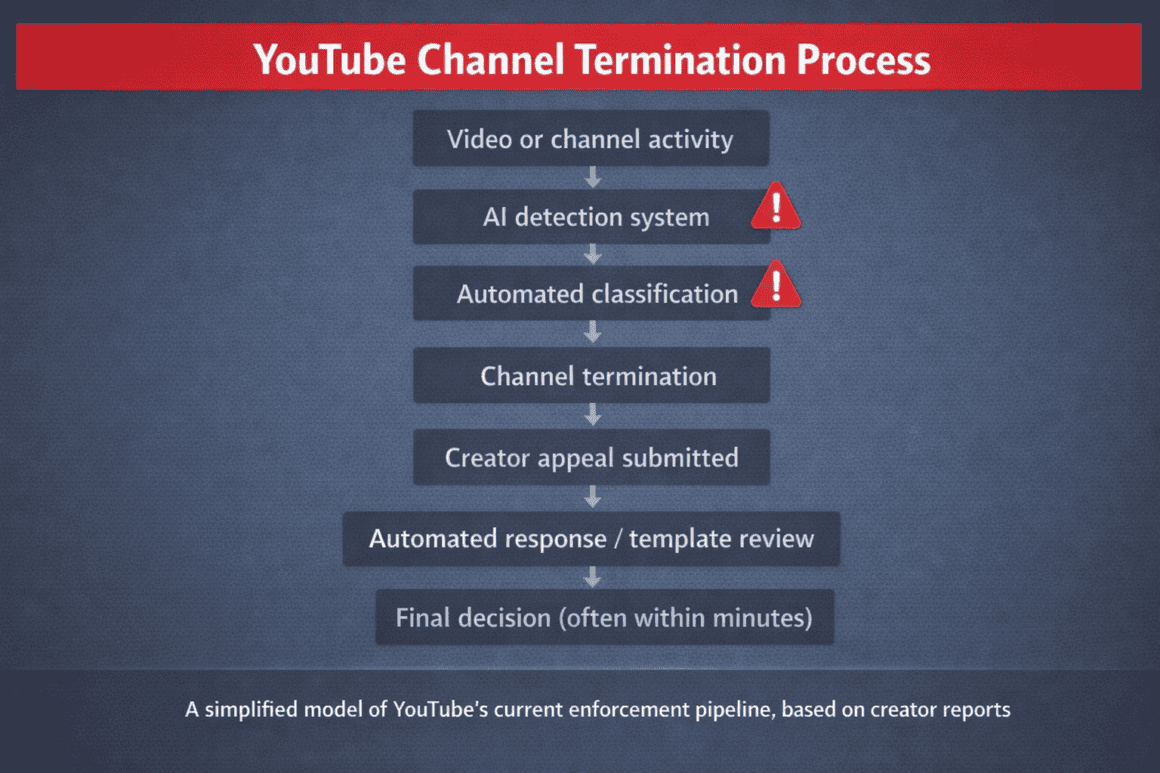

The most compelling evidence came from Enderman, a tech YouTuber with over 350,000 subscribers who runs a channel focused on Windows customization and malware testing. In early November 2025, Enderman’s channel was terminated. YouTube claimed his account was linked to a Japanese channel that had received copyright strikes.

The problem? Enderman had never heard of this Japanese channel. He did not know what language its name was even written in.

“It wrongfully concluded my channel was associated with a channel that was terminated for three copyright strikes,” Enderman explained in a video posted before his main channel went dark. “I had no idea such drastic measures as channel termination were allowed to be processed by AI and AI only.”

But here is where it gets really interesting. After his termination, Enderman started digging into how YouTube’s support actually operates. What he found shook the creator community to its core.

On November 7, 2025, Enderman posted on X: “YouTube is openly lying about a human being on the other side of the screen. You can verify whether it’s an actual human from the YouTube team or AI talking to you by looking at the tweet source device. Sprinklr is the AI agent they’re using to seed false hope into you.”

Sprinklr Care is a customer relationship management tool that includes AI-powered automation features. Enderman and other creators noticed that responses from the official @TeamYouTube account were being sent through this tool. The inference was damning: if the support response is coming from a known automation platform, then the “manual review” YouTube promises might be anything but.

According to PiunikaWeb’s reporting, another creator named @peachy highlighted a “human here” response from TeamYouTube that was immediately followed by an em dash.

And here is where my own experience becomes relevant: as someone who writes for a living and has spent considerable time working with large language models, I can tell you that LLMs love em dashes. They default to them constantly. It is one of the telltale signs of AI-generated text.

YouTube has acknowledged using Sprinklr but defended its use, arguing that the tool is primarily for message routing and that human agents still make decisions. As PiunikaWeb reported, YouTube’s defense is that Sprinklr “aggregates and manages the massive influx of messages” while providing agents with “pre-approved templates or ‘snippets’ to speed up responses.”

But here is my question: if your appeal gets rejected in five minutes after you submitted years of content for review, does it really matter whether a human technically clicked the “reject” button?

The outcome is the same. No one actually looked at your work.

The SplashPlate Paradox: Punishing the Victim

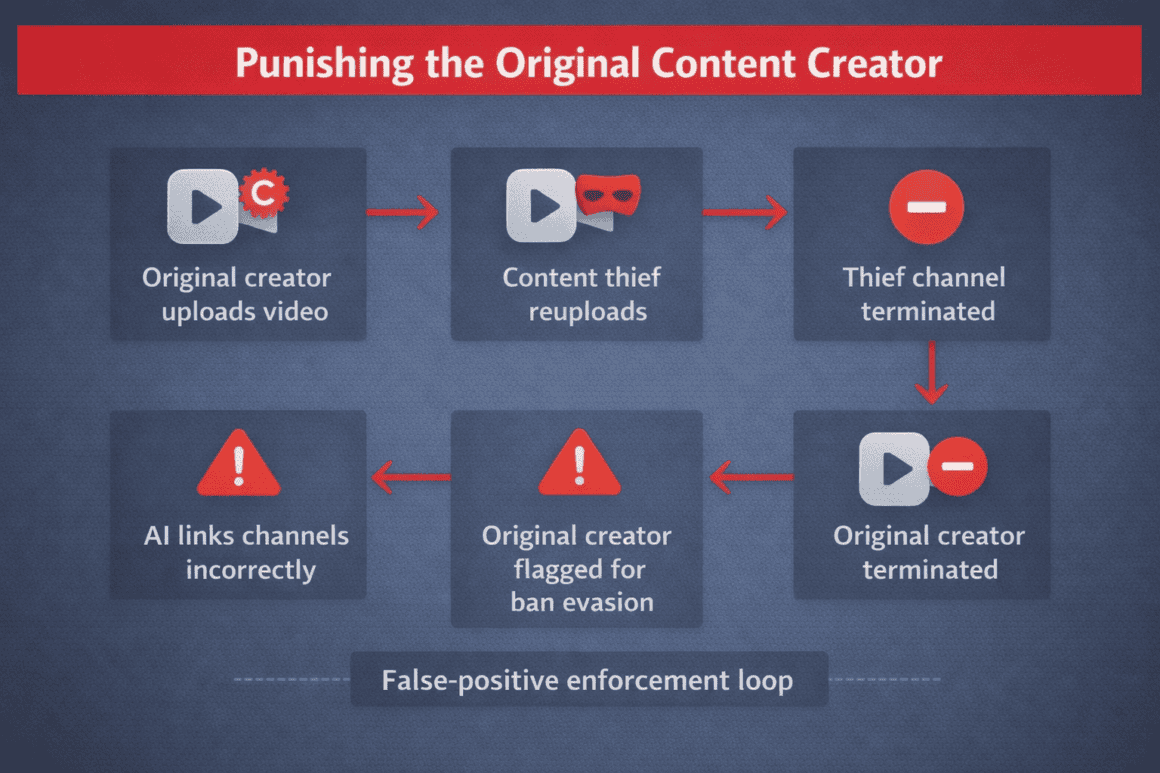

If you want to understand just how broken YouTube’s moderation has become, look no further than what happened to SplashPlate, a Pokémon content creator.

On December 9, 2025, SplashPlate posted on X: “YouTube Terminated my channel because SOMEBODY STOLE MY CONTENT. Somebody stole a video that I created here and posted on YouTube, they got Terminated, and YouTube thinks that I have reuploaded THEIR content, EVEN THOUGH IT IS MINE AND WATERMARKED.”

Read that again. A channel called EvolutionArmy had stolen SplashPlate’s video, complete with his watermark still visible on the footage. That channel got terminated for the theft, as it should have been. And then YouTube’s AI apparently decided that SplashPlate, the original creator, was the one evading a ban.

His channel got nuked for owning his own content.

When SplashPlate appealed, he got a response that showed TeamYouTube had not actually looked at his case. He posted: “They didn’t read the tweet, they didn’t watch the video. They still believe I’m circumventing a ban. I haven’t done anything wrong. I would give ANYTHING to be able to just talk to somebody about this as opposed to these automated responses.”

This is a creator who did everything right. Made original content. Watermarked it properly. And got punished because someone else stole from him.

MoistCr1TiKaL, one of the platform’s biggest creators with over 17 million subscribers, weighed in: “You punished the real person, not the imposter. The AI tools are getting better every week, huh?”

The sarcasm was well-earned.

SplashPlate’s channel was eventually reinstated on December 10, 2025, after the story went viral. YouTube acknowledged his channel was “not in violation” of Terms of Service and thanked him for his patience.

But here is the thing that keeps me up at night: how many SplashPlates are out there that didn’t go viral? How many creators got their channels terminated for content that was stolen FROM them, and never got the attention needed to get reinstated?

As SplashPlate wrote after getting his channel back: “This doesn’t end with me. Hundreds of people are being terminated DAILY due to similar errors. More people need our help. Don’t stop pushing.”

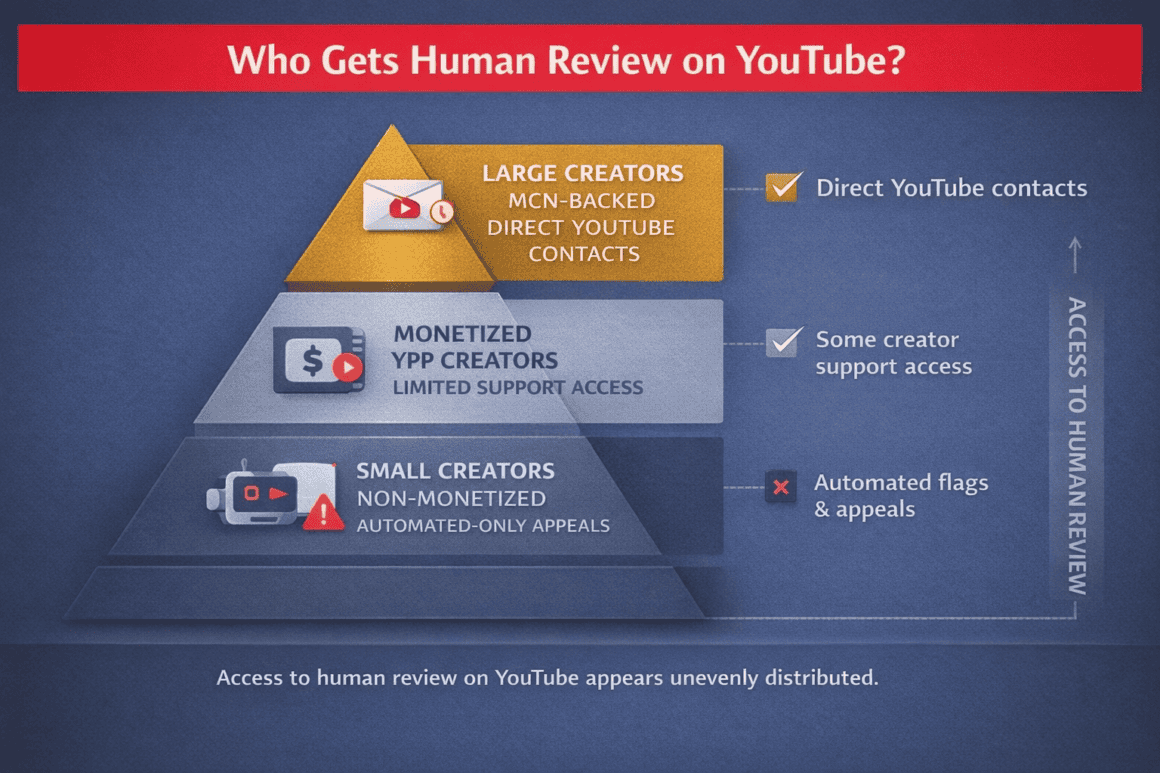

The Two-Tier System: Who Gets a Human, Who Gets the Bot

There is a pattern emerging from all these cases, and it is not a pretty one.

The creators who get reinstated tend to share certain characteristics: they have large followings, they go viral on social media complaining about their terminations, and they often have connections to larger Multi-Channel Networks. Enderman, with 350,000+ subscribers, got reinstated after his case blew up. SplashPlate got reinstated after MoistCr1TiKaL highlighted his situation to millions of viewers. Nani Josh made headlines across gaming news sites.

These creators had platforms to fight back with.

But what about the creator with 500 subscribers who gets wrongly terminated? What about the person building a channel in their spare time, uploading videos after their kids go to bed, who does not have connections to big YouTubers or the know-how to create a viral Twitter thread? What happens to them?

I think we all know the answer already. They simply disappear. Quietly. Into that mountain of 12 million terminations.

As the legal analysis from Traverse Legal notes: “YouTube operates as a critical gatekeeper controlling access to audiences and monetization for millions of creators worldwide.” They add that appeals route through internal forms with “no legal right to a conversation, and no guarantee that a person will ever review your case.”

Enderman made this exact point in his analysis: the only consistent way to reach a real person at YouTube is through a large Multi-Channel Network. If you are not part of one? Good luck.

And then there is the YouTube Partner Program itself. Monetized creators, those in the YPP, get access to features that non-monetized creators simply do not have. Partner Program members get a 7-day grace period for copyright strikes and access to Creator Support.

Non-monetized channels? They get automated flagging and a single appeal that may or may not ever see human eyes. YouTube has essentially created a caste system. And the lower castes are ruled entirely by algorithms.

Neal Mohan’s AI Optimism (And Why Creators Aren’t Buying It)

In December 2025, Time magazine named YouTube CEO Neal Mohan its CEO of the Year. In his interview, Mohan defended YouTube’s use of AI moderation, telling the magazine: “AI will make our ability to detect and enforce on violative content better, more precise, and able to cope with scale. Every week, literally, the capabilities get better.”

The timing was, shall we say, not ideal.

This was the same week SplashPlate was getting his channel deleted for owning his own content. The same period when SpooknJukes, a horror game streamer, had his video demonetized because YouTube’s AI flagged a clip of him laughing as “violent graphic content.”

Yes. Laughing. The AI thought laughing was graphic content.

SpooknJukes posted: “I went into YouTube studio and edited out the clip of me laughing, now it’s fully monetized again… HAHAHAHA WHAT THE FUCK.” He then added: “Get your f**king sh*t together. You have an automated system this terrible, and you can’t even have the decency to have actual human beings review the appeals when they happen?”

MoistCr1TiKaL did not hold back in his response to Mohan’s AI optimism. According to Dexerto’s reporting, he called the CEO’s position “delusional” and said: “We haven’t seen anything positive on YouTube as a result of these AI tools that Neal speaks so highly of.”

He went further, as reported by Reclaim The Net: “AI should never be able to be the judge, jury, and executioner… Neal seems to have a different vision in mind.”

Mohan also suggested that AI tools would “revive YouTube’s early era” of enthusiastic amateurs making content. MoistCr1TiKaL’s response, as quoted by The Escapist: “That’s a very interesting perspective on it all, and I feel that’s one you can only achieve by huffing an endless amount of glue to deteriorate your brain to a level of delusion I find almost enviable.”

I laughed out loud reading that. Then I felt sad. Because he is not wrong.

Look, we know that YouTube has scale problems. They get hundreds of hours of video uploaded every minute. No human team could manually review all of it. But when your AI is deleting channels for owning their own content and flagging laughter as violent imagery, maybe the solution is not to double down on AI.

Maybe, just maybe, AI should flag content for human review rather than making termination decisions on its own.

As MoistCr1TiKaL put it: “If it wants to use AI for moderation, it should only be able to flag channels internally and then put that up the ladder for a human being to look at.”

That seems… reasonable? Like, not even radical. Just basic due process before you delete someone’s livelihood.
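To make that proposal concrete, here is a minimal sketch in Python of a flag-then-review flow. Everything in it is hypothetical: the `Flag` and `ReviewQueue` names, the fields, and the rule that only a named human reviewer can record a decision are my illustration of the idea, not a description of how YouTube's systems actually work.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Flag:
    """A hypothetical automated flag: a suspicion, not a verdict."""
    channel_id: str
    reason: str
    decision: Optional[str] = None      # set only by a human reviewer
    reviewed_by: Optional[str] = None


@dataclass
class ReviewQueue:
    """Hypothetical queue: automation adds flags, only people close them."""
    pending: list = field(default_factory=list)

    def flag(self, channel_id: str, reason: str) -> Flag:
        # The automated system can only raise a flag for later review.
        item = Flag(channel_id, reason)
        self.pending.append(item)
        return item

    def decide(self, item: Flag, reviewer: str, decision: str) -> Flag:
        # A decision requires a named human reviewer and a known outcome.
        if not reviewer:
            raise ValueError("a human reviewer is required")
        if decision not in {"terminate", "dismiss"}:
            raise ValueError("unknown decision")
        item.decision = decision
        item.reviewed_by = reviewer
        self.pending.remove(item)
        return item


queue = ReviewQueue()
item = queue.flag("example-channel", "suspected reupload")
# Until a person reviews the flag, no decision has been recorded.
assert item.decision is None
queue.decide(item, reviewer="reviewer-1", decision="dismiss")
```

The design choice is the whole point: in this sketch the automated side has no code path that ends in a termination. It can only add to the queue, and the channel's fate is recorded against a person's name.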

What Happens Now?

YouTube has shown no signs of changing course. If anything, the platform appears to be accelerating its AI-driven approach. But the creator community is pushing back, and some of that pushback is getting noticed.

In October 2025, YouTube launched a pilot program allowing some terminated creators to request new channels after a one-year waiting period. It is a small acknowledgment that sometimes the system gets it wrong. But the program excludes creators terminated for copyright infringement, which cuts out a lot of people who were wrongly linked to channels they had nothing to do with.

For now, the advice to creators is grim but practical: treat YouTube as a side hustle, not your entire business. Back up everything. Build audiences on other platforms. Do not assume your channel will be there tomorrow.

And if you do get terminated wrongly? Go loud. Very loud. Because right now, viral attention seems to be the only reliable path to getting a real human to look at your case.

That is not how platform governance should work. It should not be that the squeaky wheel, the viral tweet, the MoistCr1TiKaL signal boost, is what determines whether you get justice.

But here we are.

The Bottom Line

Twelve million channels were terminated in nine months. An unknown number of creators whose livelihoods vanished overnight. A CEO claiming the AI gets better every week while that same AI demonetizes a video over a laugh and terminates a creator over his own stolen content.

Something has gone very wrong at YouTube.

And right now, I do not see anyone at the company who seems to understand that.

The platform that built its brand on “Broadcast Yourself” has become a place where an algorithm can broadcast you right out of existence. Where the content you created can be stolen, and you get punished for it. Where your appeal might be reviewed by a bot pretending to be human, using the same AI tools that wrongly terminated you in the first place.

Every creator on YouTube, every single one, is now playing a game where the rules are unknowable, the enforcement is automated, and the appeals process may be a fiction.

Neal Mohan calls this progress. The creators losing their channels call it something else.

I know which side I believe.

Sources

Dexerto, “YouTube responds to AI concerns as 12 million channels terminated in 2025” (December 11, 2025): https://www.dexerto.com/youtube/youtube-responds-to-ai-concerns-as-12-million-channels-terminated-in-2025-3292911/

Dexerto, “MoistCr1TiKaL blasts ‘delusional’ YouTube CEO for letting AI ban channels” (December 10, 2025): https://www.dexerto.com/youtube/moistcritikal-hits-out-at-delusional-youtube-ceo-over-ai-tools-claim-3292136/

Dexerto, “Pokemon YouTuber banned by AI finally gets channel back after creator stole his video” (December 10, 2025): https://www.dexerto.com/youtube/pokemon-youtuber-banned-by-ai-as-channel-steals-their-video-but-youtube-wont-budge-3291898/

Dexerto, “YouTube AI restricts streamer’s video after mistaking a laugh for ‘graphic content’” (December 10, 2025): https://www.dexerto.com/youtube/youtube-ai-restricts-streamers-video-after-mistaking-a-laugh-for-graphic-content-3292404/

Dexerto, “YouTube CEO says more AI moderation is coming despite creator backlash” (December 8, 2025): https://www.dexerto.com/youtube/youtube-ceo-says-more-ai-moderation-is-coming-despite-creator-backlash-3291243/

Dexerto, “Tech YouTuber irate as AI ‘wrongfully’ terminates account with 350K+ subscribers” (November 4, 2025): https://www.dexerto.com/youtube/tech-youtuber-irate-as-ai-wrongfully-terminates-account-with-350k-subscribers-3278848/

PiunikaWeb, “YouTube admits using AI to handle creator appeals and customer support” (November 10, 2025): https://piunikaweb.com/2025/11/10/youtube-admits-using-ai-to-handle-creator-appeals-and-customer-support/

PiunikaWeb, “Enderman loses YouTube channel to flawed AI moderation” (November 4, 2025): https://piunikaweb.com/2025/11/04/youtube-ai-error-terminates-enderman-channel/

PPC Land, “YouTube CEO defends AI moderation as creators lose channels overnight” (December 14, 2025): https://ppc.land/youtube-ceo-defends-ai-moderation-as-creators-lose-channels-overnight/

The Escapist, “MoistCr1TiKaL is crashing out after YouTube CEO defended AI moderation and content” (December 11, 2025): https://www.escapistmagazine.com/news-moistcr1tikal-ai-reaction/

Reclaim The Net, “AI Is YouTube’s New Gatekeeper” (December 11, 2025): https://reclaimthenet.org/ai-is-youtubes-new-gatekeeper

Sportskeeda, “Why was Enderman’s YouTube channel removed?” (November 5, 2025): https://www.sportskeeda.com/us/streamers/why-enderman-s-youtube-channel-removed-understating-platform-deleting-creators-accounts

Esports News UK, “Lazy YouTube AI tools ruin careers after 12m+ accounts deleted” (December 11, 2025): https://esports-news.co.uk/2025/12/11/lazy-automated-youtube-ai-moderartion-ends-careers-after-12m-accounts-deleted/

Clownfish TV, “YouTube Blames ‘Southeast Asia’ Scammers for Purging Over 7 Million Accounts” (December 11, 2025): https://clownfishtv.com/youtube-blames-southeast-asia-scammers-for-purging-over-7-million-accounts/

Traverse Legal, “YouTube Account Deactivation Signals the Rise of AI-Only Moderation” (December 5, 2025): https://www.traverselegal.com/blog/youtube-account-deactivation-ai-enforcement/

Nani Josh (@mister_manners_) X posts on YouTube terminations, December 2025

Enderman (@endermanch) X posts on Sprinklr and AI moderation, November 2025

SplashPlate (@SplashPlateVGC) X posts on content theft termination, December 2025

SpooknJukes (@SpooknJukes) X posts on graphic content flag, December 2025

TeamYouTube (@TeamYouTube) official responses on X, November-December 2025